Ekapkgs, a poly-repo fork of Nixpkgs

Why fork Nixpkgs?

Nixpkgs is a technical marvel. Boasting over 100k packages, it’s the largest and most up-to-date software repository in the world. (See the next image!) However, this monolithic achievement comes at a great cost in maintainability, infrastructure, and inertia. Many of the conventions in Nixpkgs have evolved organically from contributors; sometimes this has produced paradigm-shifting abstractions such as NixOS modules, but more often it yields many similar-but-different solutions that lack cohesion.

Image source: repology.org

Nixpkgs’ impressive scope has inadvertently made it hard to ratify more radical changes or iterate on conventions under the weight of its own size. Changing a technical convention requires a proposal to go through the NixOS RFC process, which generally takes months to years; this heavily discourages contributors who want to enact change. Even a smaller improvement via an RFC involves:

- Drafting an RFC

- Gathering 3 or more shepherds

- Responding to feedback over months or sometimes years

- Getting sign-off from the RFC Steering Committee

This is usually a 12-month or longer process with a high chance of rejection.

Nixpkgs’ scale and heavyweight processes create an environment where innovation has already started to stagnate and user-experience improvements are ever harder to accomplish.

Tackling the scale of Nixpkgs through multiple repositories

Nixpkgs is a double-edged sword when it comes to packaging. On one hand, Nix knows all of the dependencies that go into a package, which lets it succinctly communicate “retained” (runtime) dependencies. On the other hand, this deep introspection into all dependencies means that changing a package like glibc or openssl “rebuilds the world”, as many packages use these packages directly or have them as transitive dependencies.
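To make “rebuilds the world” concrete: in Nix’s default input-addressed model, a derivation’s store path depends on the hashes of its inputs, so changing one package forces a rebuild of everything that depends on it, directly or transitively. The Python sketch below computes that rebuild set with a breadth-first search over reverse dependencies. The dependency graph is a small hypothetical example, not taken from Nixpkgs.

```python
from collections import deque
from typing import Dict, List, Set

# Hypothetical toy graph: package -> packages it depends on (illustrative only).
DEPS: Dict[str, List[str]] = {
    "glibc": [],
    "openssl": ["glibc"],
    "zlib": ["glibc"],
    "curl": ["openssl", "zlib"],
    "git": ["curl", "zlib"],
    "python3": ["openssl", "zlib"],
    "hello": ["glibc"],
}


def reverse_deps(deps: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Invert the graph: package -> packages that depend on it directly."""
    rdeps: Dict[str, Set[str]] = {name: set() for name in deps}
    for pkg, inputs in deps.items():
        for dep in inputs:
            rdeps[dep].add(pkg)
    return rdeps


def rebuild_set(deps: Dict[str, List[str]], changed: str) -> Set[str]:
    """The changed package plus everything reaching it through dependency edges."""
    rdeps = reverse_deps(deps)
    seen = {changed}
    queue = deque([changed])
    while queue:
        for dependent in rdeps[queue.popleft()]:
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen


print(sorted(rebuild_set(DEPS, "openssl")))  # ['curl', 'git', 'openssl', 'python3']
print(len(rebuild_set(DEPS, "glibc")), "of", len(DEPS))  # 7 of 7
```

Even in this tiny graph, touching the bottom of the stack (glibc) rebuilds every package, while a leaf such as hello rebuilds only itself.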

To mitigate massive package rebuilds, the so-called staging process was created for Nixpkgs.

This workflow batches all high-rebuild changes into a branch called staging.

Periodically, staging is merged into the staging-next branch, where core contributors “stabilize” it by attempting to fix broken builds.

These mass rebuilds typically involve tens of thousands of packages and take up to a week, depending on the load on the build infrastructure.

This stabilization effort is generally the biggest cause of regressions in nixpkgs:

Many of the changes applied to staging-next receive little to no code review, and reviewing them is too time-consuming anyway due to the rebuild count.

From a contributor’s perspective, this forces a compromise between volunteer burnout and fixing broken builds.

This also has second-order effects, such as adapting the release process to this workflow (RFC 85) and creating smoke tests (RFC 119) to mitigate the most common packaging failures.

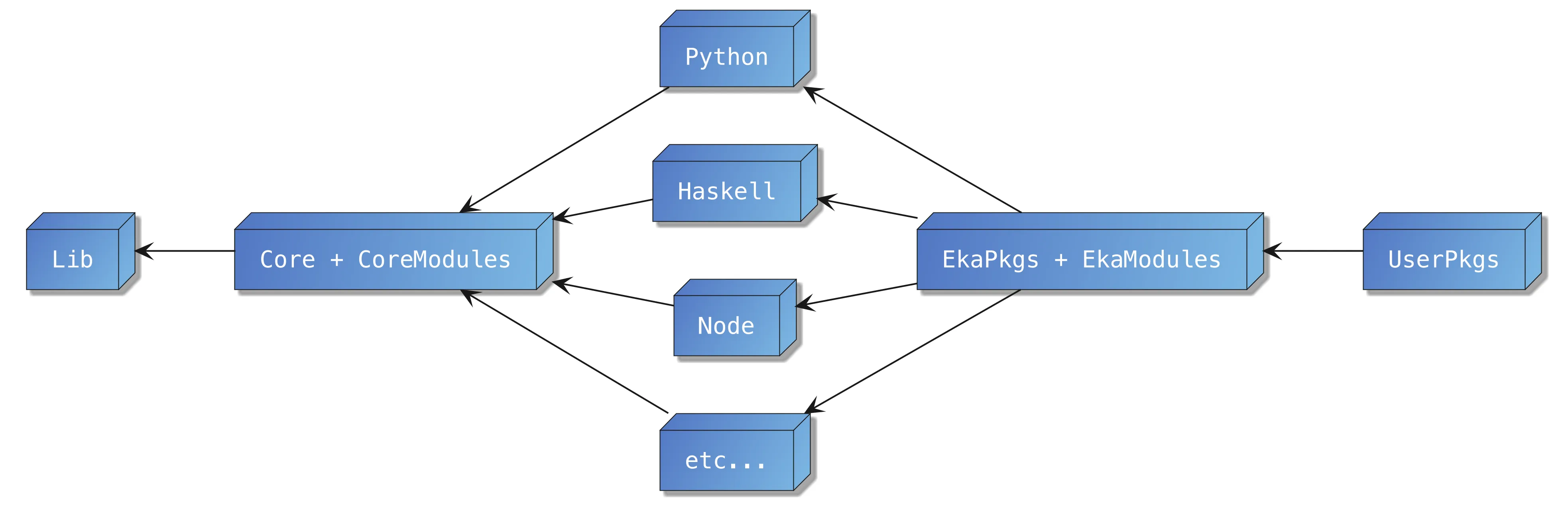

Ekapkgs plans to resolve these pain points by splitting up the monolithic package set into orthogonal repositories:

- lib: Library of Nix-only utilities; has no knowledge of packaging

- corepkgs: “Core” development and deployment libraries and tools

- Languages: Separate language ecosystems (e.g. python, haskell, etc.)

- Ecosystems: Separate tool ecosystems (e.g. home-assistant, CUDA)

- ekapkgs: Capstone package set that integrates all other repos and contains software not appropriate for core or a language repo

- userpkgs: The equivalent of AUR/NUR. A semi-centralized location for people to iterate on packaging software with lower standards with regard to best practices, closure sizes, or organization

Smaller package sets enable much quicker updates and iteration. In corepkgs, even large changes affect only a few thousand builds, which removes the need for the staging-branch workflow altogether. Instead, the staging workflow will be replaced by updating pins to upstream package repositories. These stabilizations of downstream repositories will be smaller in scope and, likewise, can be merged once regressions are addressed.

The division of packaging responsibility will also be useful in managing code quality, stability, and package scope.

As you move more toward corepkgs, you will get fewer breaking changes, fewer broken packages, more tested packages, and higher packaging quality.

As you move toward ekapkgs, you will get more packages available at the cost of those packages likely having less polish, less testing, and more breakages.

This divide will allow for users who prioritize security and stability to track corepkgs, while users who prioritize software availability can consume ekapkgs.

Of course, users can create their own distribution on top, integrating a different mixture of package collections from other repositories that meets their requirements: robotpkgs, aipkgs, hpcpkgs, etc.

Make Package Curation Easy

Deciding whether a PR is ready to merge in Nixpkgs is largely left as an exercise to a maintainer or committer. The CI (ofBorg) mostly ensures that you didn’t break evaluation of the package set or the build of the package being changed. All other aspects of a package change (downstream builds, changes to the realized build outputs, coupled package changes) are left to the maintainer, who must make a manual and often largely uninformed decision. Inspecting these changes by hand is error-prone, inconsistent, and potentially expensive in compute and storage. Reviewing PRs for Nixpkgs (there are always thousands of open PRs) is an exhausting process for both PR authors and reviewers.

Maintaining hundreds of thousands of packages is a monumental task, and it requires tooling that minimizes the challenges involved. For ekapkgs, we are developing eka-ci, a tool that aims to streamline determining whether and when a package update is “good enough to merge”. Specifically, it checks downstream package builds and changes to the built package outputs. This introspection should go a long way toward informing reviewers, in much greater detail, of a PR’s ramifications.
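The kind of check described above can be sketched as a diff between two evaluations. The Python below is a minimal, hypothetical sketch, not eka-ci’s actual implementation: it assumes each evaluation (target branch and PR) has already been reduced to a map from attribute name to output path, classifies attributes as added, removed, or changed, and flags changed packages that built before the PR but fail with it. The store paths (with placeholder hashes) and build results are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EvalDiff:
    added: List[str] = field(default_factory=list)
    removed: List[str] = field(default_factory=list)
    changed: List[str] = field(default_factory=list)


def diff_evaluations(base: Dict[str, str], head: Dict[str, str]) -> EvalDiff:
    """Compare attribute -> output path maps from the target branch and the PR."""
    diff = EvalDiff()
    for attr in sorted(base.keys() | head.keys()):
        if attr not in head:
            diff.removed.append(attr)
        elif attr not in base:
            diff.added.append(attr)
        elif base[attr] != head[attr]:
            # A different output path means this attribute must be rebuilt.
            diff.changed.append(attr)
    return diff


def newly_broken(changed: List[str], base_ok: Dict[str, bool],
                 head_ok: Dict[str, bool]) -> List[str]:
    """Changed packages that built on the base branch but fail with the PR."""
    return [a for a in changed if base_ok.get(a, False) and not head_ok.get(a, False)]


# Hypothetical evaluations; hashes are placeholders, not real store paths.
base = {"openssl": "/nix/store/aaa-openssl", "curl": "/nix/store/bbb-curl",
        "hello": "/nix/store/ccc-hello"}
head = {"openssl": "/nix/store/ddd-openssl", "curl": "/nix/store/eee-curl",
        "hello": "/nix/store/ccc-hello", "my-tool": "/nix/store/fff-my-tool"}

diff = diff_evaluations(base, head)
print(diff)  # EvalDiff(added=['my-tool'], removed=[], changed=['curl', 'openssl'])
print(newly_broken(diff.changed, {"curl": True, "openssl": True},
                   {"curl": False, "openssl": True}))  # ['curl']
```

A reviewer would then see at a glance that the PR rebuilds curl and openssl, adds my-tool, and introduces one regression (curl).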

Make Downstream Repositories a First-Class Citizen

Any business that adopts Nix will eventually face the question of how to curate all of the software it develops and deploys. Generally, this takes the form of one or more overlays that provide the packaging needed to build that software. Being able to leverage a massive repository of software is one of the main advantages of Nixpkgs, and with overlays one can effectively “extend” such a package set with their own software in a near-frictionless manner. Because ekapkgs is natively structured as a collection of repositories, extending it will work the same way corepkgs is consumed within the Ekala ecosystem.

However, heavy flake usage reflects a philosophical shift from composition toward reproducibility. In today’s Nix landscape, flakes are king outside of Nixpkgs. Many projects enjoy the freedom to pin their few dependencies and get a means to generate a developer shell, a package, an overlay, or NixOS modules. Bridging the gap between flakes and overlays is achievable, but it is by no means the expected convention. Moving toward a “composition through overlays” paradigm will be a slow but necessary process.

Ekapkgs will diverge from this convention by allowing most package scopes to respect a config.overlays.<scope> option (proposal with examples), which lets repositories apply more targeted overlays.

Individuals who commonly package Python, CUDA, or other “context-dependent” software will have the necessary levers to transparently change or extend these package scopes with their own packages.
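The overlay mechanics described in this section can be modelled outside Nix. The Python sketch below mimics Nixpkgs-style final/prev overlays (roughly what lib.fix and lib.extends do in Nix) using lazily evaluated thunks, then routes overlays to a single scope through a hypothetical config["overlays"]["python"] entry, loosely mirroring the proposed config.overlays.<scope> option. The scope contents, the make_pkgs helper, and the exact semantics are assumptions for illustration; the actual proposal may differ.

```python
from typing import Callable, Dict, List

Thunk = Callable[[], object]


class AttrSet:
    """Lazy attribute set: each value is a thunk, evaluated and cached on first access."""

    def __init__(self, thunks: Dict[str, Thunk]) -> None:
        self.thunks = thunks
        self._cache: Dict[str, object] = {}

    def __getitem__(self, name: str) -> object:
        if name not in self._cache:
            self._cache[name] = self.thunks[name]()
        return self._cache[name]


def fix_with_overlays(base, overlays: List) -> AttrSet:
    """Model of fix/extends: each overlay gets `final` (the fully overlaid result)
    and `prev` (the layers beneath it) and returns new or replacement thunks."""
    final_thunks: Dict[str, Thunk] = {}
    final = AttrSet(final_thunks)  # populated once all layers are composed
    layer = AttrSet(base(final))
    for overlay in overlays:
        layer = AttrSet({**layer.thunks, **overlay(final, layer)})
    final_thunks.update(layer.thunks)
    return final


# Hypothetical scope contents; names are illustrative only.
def python_base(final):
    return {
        "interpreter": lambda: "python3",
        "requests": lambda: f"requests (for {final['interpreter']})",
    }


def cuda_base(final):
    return {"toolkit": lambda: "cuda-toolkit"}


def make_pkgs(config: dict) -> AttrSet:
    """Hypothetical top-level set: each scope applies only config["overlays"][<scope>]."""
    scoped = config.get("overlays", {})

    def top_level(final):
        return {
            "python": lambda: fix_with_overlays(python_base, scoped.get("python", [])),
            "cuda": lambda: fix_with_overlays(cuda_base, scoped.get("cuda", [])),
        }

    return fix_with_overlays(top_level, [])


def my_python_overlay(final, prev):
    # Patch an existing package and add an in-house one, inside `python` only.
    return {
        "requests": lambda: f"{prev['requests']} +patch",
        "my-lib": lambda: f"my-lib (needs {final['requests']})",
    }


pkgs = make_pkgs({"overlays": {"python": [my_python_overlay]}})
print(pkgs["python"]["my-lib"])  # my-lib (needs requests (for python3) +patch)
print(pkgs["cuda"]["toolkit"])   # cuda-toolkit (untouched by the python overlay)
```

Because my-lib reads requests through final, it picks up the patched version, while the cuda scope never sees the overlay. This is the “targeted” property a per-scope option is meant to provide.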

Encouraging Process Improvements

Another significant change is a shift from “Requests for Comments” to focused enhancement proposals. Instead of making a public plea and trying to get buy-in from other contributors, a dedicated body of individuals will act as shepherds for all Ekala Enhancement Proposals (EEPs). This committee will be more empowered to judge a proposal’s suitability and alignment with the project’s goals, and to give actionable feedback on direction and approval. Of course, community feedback will always be welcome, but removing the need for other contributors to volunteer as shepherds gives mundane proposals a much higher chance of success.

In addition, contributors can create an “Informational Proposal”, which identifies a problem and its ramifications without proposing a solution. Sometimes describing what the problem is and how it affects people or processes is the first step toward a satisfactory resolution.